What a thrill it was to attend this year’s Language Testing Research Colloquium (LTRC) at the University of Innsbruck! Our assessment research team looks forward to this gathering every year, and for good reason: not only do we get to share our own efforts to reform language assessment systems and research (this year’s theme), but we also get to connect with some of the brightest minds in the field, and do some incredible sightseeing while we’re at it!

We shared our work on digital testing

First up was Geoff LaFlair’s presentation, Big Data & Open Science: Balancing Collaboration, Privacy, and Protection. Geoff represented the industry perspective in the symposium on Open Science in language testing, organized by chair and discussant Dylan Burton of the University of Illinois Urbana-Champaign, who is also a former DET Doctoral Dissertation Award winner! Working in an industry whose primary customers are test takers, Geoff explored how the open science movement could inadvertently put both proprietary and personal information at risk. He emphasized the need for clear data-sharing guidelines that respect and protect both test takers and intellectual property rights.

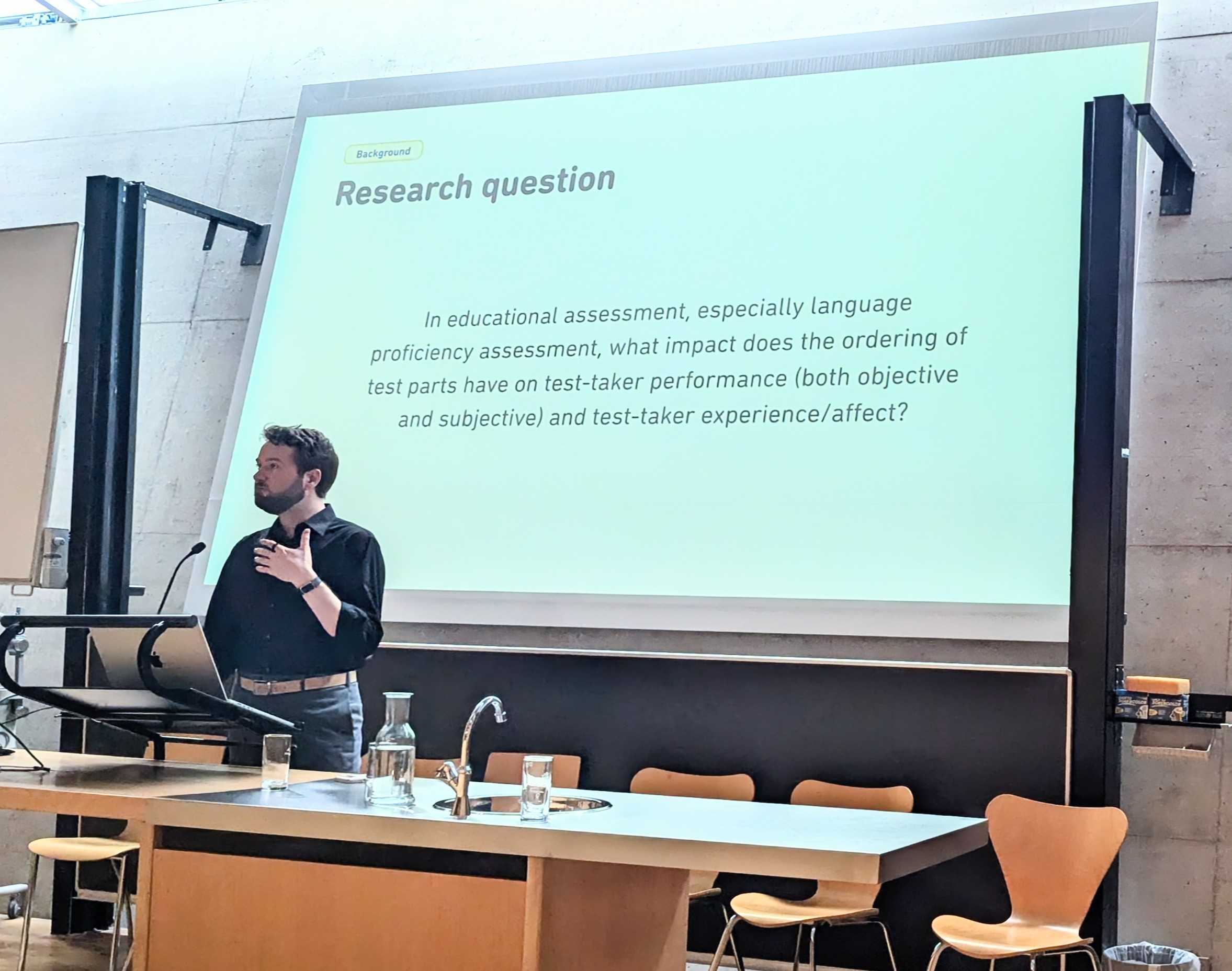

On Thursday, Ramsey Cardwell shared the literature review he conducted with Ben Naismith on the ordering of test components. They found that while there is existing research on how difficulty-based item ordering affects performance, and on anxiety as a mediating variable, there is a striking lack of research on section ordering in adaptive testing and language proficiency testing contexts. They proposed several topics for further research so that testers can leverage the full potential of digital testing technology to create assessments that are accessible, equitable, inclusive, secure, and valid.

On Friday, Andrew Runge presented Human-Centered AI for Test Development, his work with fellow DET researchers Alina A. von Davier, Yigal Attali, Yena Park, Geoff LaFlair, and Jacqueline Church. Human-Centered AI is a framework that emphasizes the contributions of educators, experts, and students, ensuring that AI systems enhance, rather than replace, the work of teachers and developers, so that humans and machines can each do what they do best. It places a strong emphasis on transparency, fairness, and ethical considerations in educational AI. To demonstrate this framework in practice, the team proposed ‘the Item Factory,’ a new model that combines automation, AI, and engineering principles with human expertise to scale the item development process for high-volume, high-stakes testing in the digital era.

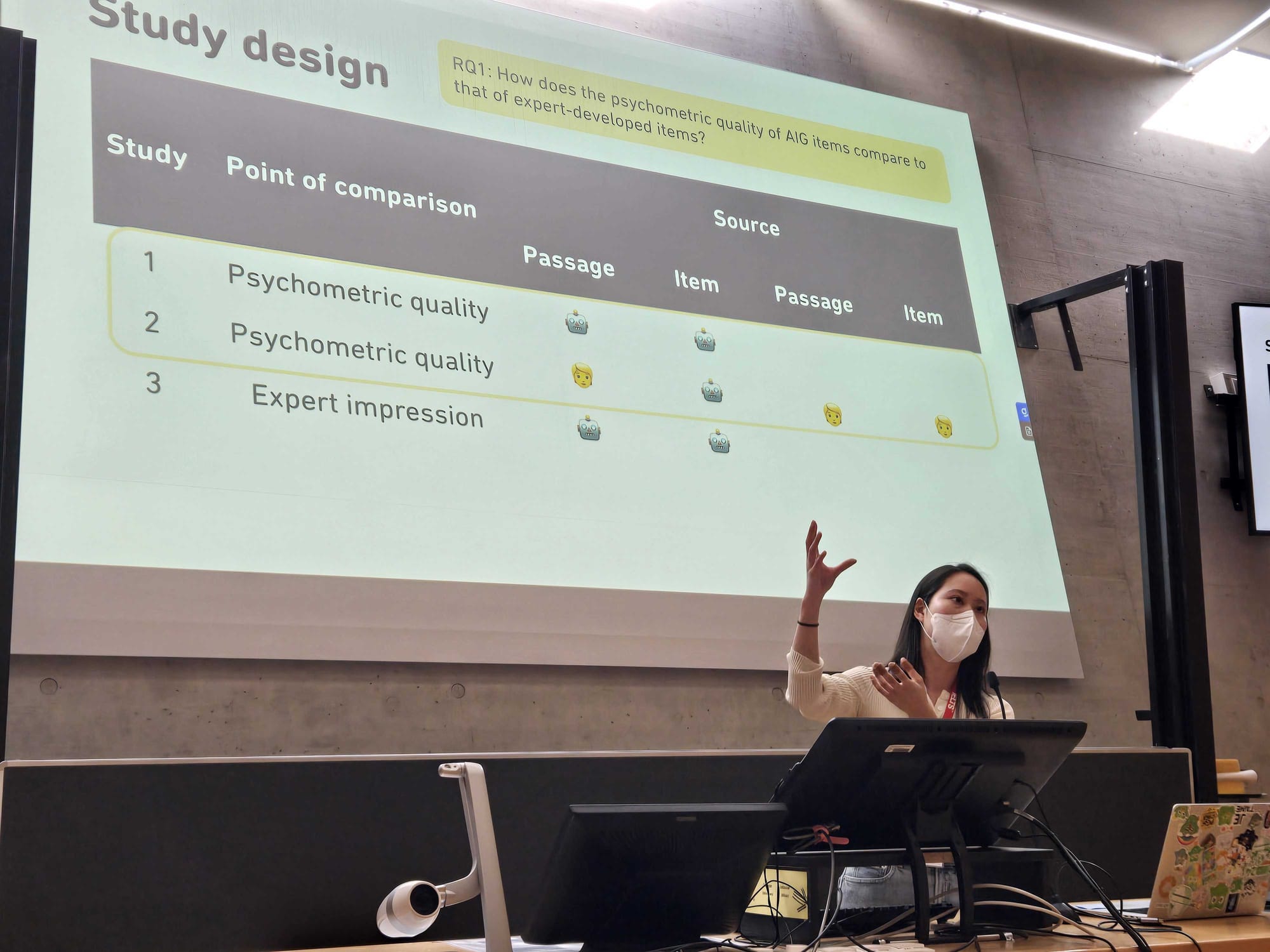

Immediately following Andrew’s presentation, Yena Park shared the paper she wrote with Jacqueline Church and Yigal Attali, Comparing item characteristics of human-written and GPT-4-written items: Can GPT write good items? Their work explores the potential for large language models (LLMs) to alleviate the resource-intensive, cognitively taxing nature of item writing. Comparing test taker responses to expert-written and automatically generated sets of items, they found that LLMs can indeed produce items of comparable psychometric quality while staying faithful to the item specifications and item writing guidelines, which facilitates test development at scale.

We learned from first-class researchers in the field

Of course, our researchers aren’t the only ones pursuing studies on innovation in digital testing! Ruslan Suvorov of the University of Western Ontario presented his paper, An eye-tracking study of response processes on C-test items in the Duolingo English Test. For all tests, and especially newer, adaptive tests like the DET, it’s important to understand how test takers interact with test items, and how their response processes are related to scores and item difficulty levels. Ruslan’s study leveraged eye tracking to examine these relationships. The findings revealed individual differences among L2 test takers’ response processes, highlighting the importance of complementing outcome-based evidence of language proficiency with process-based evidence.

In Where the Lines are Drawn: A Survey of English Proficiency Test Use in Admissions Among U.S. Research-Intensive Universities, Nicholas Coney and Daniel R. Isbell of the University of Hawaiʻi at Mānoa examined the English Language Proficiency (ELP) requirements for international students applying to research-intensive Carnegie R1 universities in the United States. In total, they found 32 different ELP tests being used for admissions, with the DET among the most common. Their findings provide important context for evaluating test use in real-world higher education settings.

Given that Africa has the fastest-growing and youngest population of any continent, Kadidja Koné of the Ecole Normale d’Enseignement Technique et Professionnel (ENETP), University of Letters and Humanities Bamako, Mali, and Paula Winke of Michigan State University believe that standardized international language testing must become more African, too. Their paper, Shedding Light on the Test-Taking Experiences of Francophone African Learners of English in High-Stakes English Proficiency Testing, investigated West African test takers’ beliefs and reflections after taking a standardized international English test in Africa, namely the DET. Their focus group explored how West African culture interacted with test takers’ exam experiences, and revealed that these test takers’ motivations for testing went beyond university admissions to include diagnostic and formative purposes.

We honored emerging scholars

As current ILTA President Claudia Harsch said in her opening remarks, LTRC wouldn’t be LTRC without awards! Sure enough, the week ended with a banquet and awards ceremony at Villa Blanka, on the slopes of the Alps. Just before dinner was served, Geoff announced the 13 most recent winners of our annual doctoral dissertation awards, several of whom were in attendance!

Next, Claudia announced the winners of the ILTA/Duolingo Collaboration and Outreach Grants: Dusadee Rangseechatchawan of Chiang Mai Rajabhat University, for her paper Developing English language proficiency tests for Thai university students: From theories to practices; freelance ELT consultant Rama Mathew, for her paper Promoting sustainable language assessment practices among middle school teachers in India; and Angel Arias of Carleton University and Jamie L. Schissel of the University of North Carolina at Greensboro, for their paper Promoting meaningful assessment in the language classroom in the Dominican Republic.

Each of these winners receives funding from Duolingo to support their research. We’re so proud to play a small part in supporting these scholars, and look forward to seeing their work progress!

Thanks for an amazing conference!

We’re so grateful to the LTRC organizing team for putting on such a thought-provoking conference! Conference Chair Benjamin Kremmel and his team—Simone Baumgartinger, Kathrin Eberharter, Viktoria Ebner, Annette Giesinger, Elisa Guggenbichler, Eva Konrad, Doris Moser-Frötscher, Carol Spöttl, and Sigrid Hauser—thought of everything, and made us feel truly welcome at the University. We’ll be thinking about our experiences at this conference for a long while, and we’re already looking forward to next year!

To learn more about research into the Duolingo English Test, visit our publications!